In this article, I’ll walk you through how to set up a K3s cluster and then how to create a Ceph cluster on top of it.

Removing the Ceph cluster completely, and later the K3s cluster as well, is left for a follow-up article.

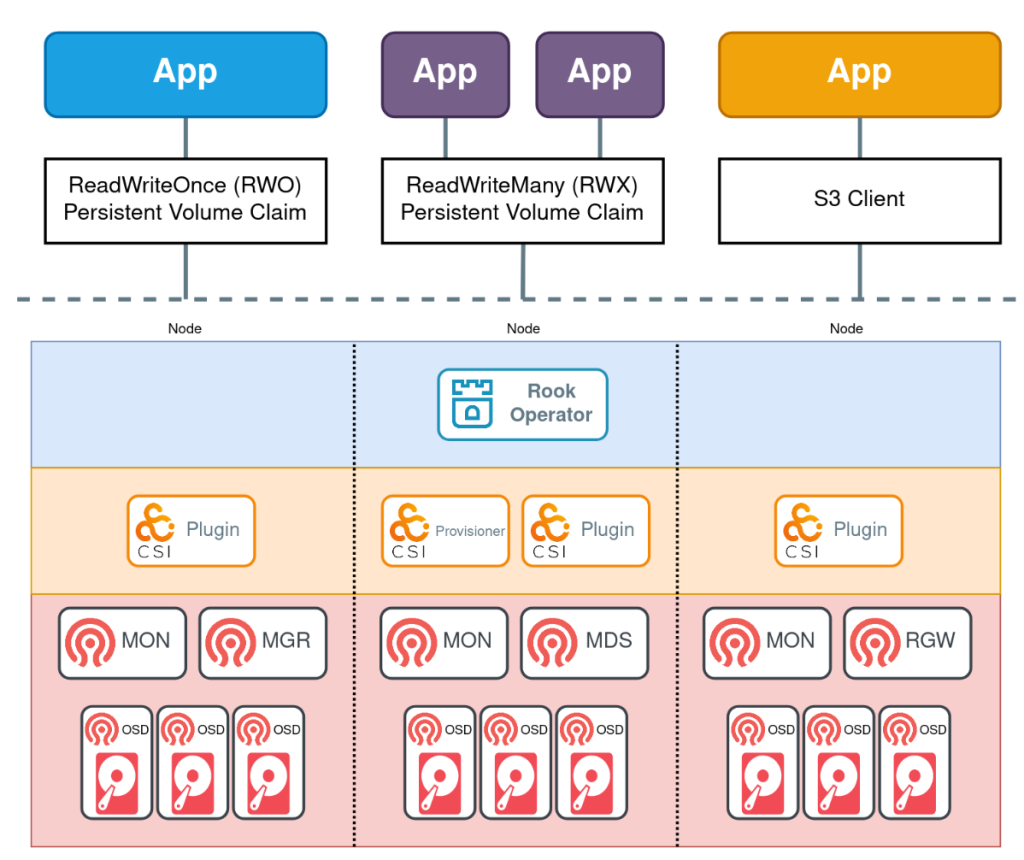

Ceph is a highly scalable, open-source distributed storage system that provides object, block, and file storage in a unified system. It is designed to run on commodity hardware, delivering high performance, reliability, and scalability.

Rook is an open-source cloud-native storage orchestrator for Kubernetes, designed to automate the deployment, management, and scaling of storage services. It allows for integration of various storage solutions within a Kubernetes cluster. Rook transforms storage systems into self-managing, self-scaling, and self-healing storage services.

Rook-Ceph combines the Rook orchestrator with the Ceph storage system, providing a powerful, production-ready storage solution for Kubernetes environments.

There are many reasons to use Rook-Ceph. One is unified storage, with support for object, block and file storage. Another is the flexibility Ceph brings to the table, along with its self-healing and self-managing nature. Scalability and automation are a few other advantages of Rook-Ceph.

Since the purpose of this article is to get some hands-on experience, let’s jump into action…!

Prerequisites:

In this setup I’ve used 3 RHEL 9.4 VMs. With the install commands used below, k3s-ctrl1 runs the K3s server (control plane) and the other two join as agents; all three nodes run workloads.

The hostnames and IP mapping are as follows.

k3s-ctrl1 192.168.8.128

k3s-ctrl2 192.168.8.195

k3s-ctrl3 192.168.8.138

In this setup I used 8 GB of RAM and 4 vCPUs for each of the 3 VMs. However, if you actually plan to use the cluster for a significant amount of testing, higher specs are highly recommended, at least for the k3s-ctrl1 node. Please note that all 3 nodes must be able to reach each other over the network. As the IPs above show, they are all members of the 192.168.8.0/24 subnet.

Moreover, I’ve attached 6 disks of 30 GB each to be used by the Ceph OSDs (Object Storage Daemons), 2 disks per VM. I’ve set this up in VirtualBox, so the disks are Virtual Hard Disks attached to the VMs.
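Rook only creates OSDs on raw devices, meaning disks with no partitions and no filesystem on them, so it can help to confirm that the attached disks really are empty before going further. The following is a minimal sketch rather than an official check: `raw_disks` is a hypothetical helper name, and the prefix match it uses to link partitions to their parent disk is a simple heuristic.

```shell
# Hypothetical helper: list whole disks that have no filesystem signature
# and no partitions, i.e. likely candidates for Ceph OSDs.
# Reads "NAME TYPE FSTYPE" rows on stdin, as printed by:
#   lsblk -rno NAME,TYPE,FSTYPE
raw_disks() {
  awk '
    # A whole disk with an empty FSTYPE column is a candidate.
    $2 == "disk" && $3 == "" { disk[$1] = 1; next }
    # Anything that is not a whole disk (part, lvm, rom, ...) is a child.
    $2 != "disk" { child[$1] = 1 }
    END {
      for (d in disk) {
        used = 0
        # Heuristic: a child named like "sda1" belongs to disk "sda".
        for (c in child) if (index(c, d) == 1) used = 1
        if (!used) print d
      }
    }
  ' | sort
}
```

Running `lsblk -rno NAME,TYPE,FSTYPE | raw_disks` on each VM should, assuming the extra disks show up as sdb and sdc as they do in my setup, print exactly those two names.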

Most importantly, never try this directly on your own laptop/desktop. Always set it up within a VM. This is because Ceph has a notorious reputation for taking over the machine’s (laptop’s/desktop’s) file system as its own.

Setting up the K3s Cluster

First, log in to the k3s-ctrl1 node, which I’m going to use as the master node.

Then, execute the below command to install k3s on the node.

curl -sfL https://get.k3s.io | sh -

The above will install k3s on the master node.

As the next step, we have to get the K3S_TOKEN. To do this we can cat the following file on the same master node.

cat /var/lib/rancher/k3s/server/node-token

Once we obtain the token, we can execute the below command on the remaining two instances.

curl -sfL https://get.k3s.io | K3S_URL=https://<master_node_ip>:6443 K3S_TOKEN=<token> sh -

Here <master_node_ip> is 192.168.8.128 in my setup, and <token> is the value printed by the cat command above. This will install the k3s agent on the remaining two nodes, and those two nodes will join the cluster.
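To avoid copy-paste mistakes with the token, the join command can be generated on the master node and then pasted on the other two. This is just a convenience sketch; `k3s_join_command` is a hypothetical helper name, not part of K3s.

```shell
# Hypothetical helper: print the agent join command for a given
# server IP ($1) and node token ($2). Nothing is executed here.
k3s_join_command() {
  server_ip=$1
  token=$2
  printf "curl -sfL https://get.k3s.io | K3S_URL=https://%s:6443 K3S_TOKEN='%s' sh -\n" \
    "$server_ip" "$token"
}
```

On k3s-ctrl1 it could be called as `k3s_join_command 192.168.8.128 "$(sudo cat /var/lib/rancher/k3s/server/node-token)"`, and the printed line run on k3s-ctrl2 and k3s-ctrl3.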

Can confirm the node status by executing the below command.

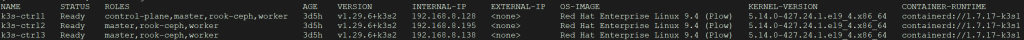

kubectl get node -o wideThe output should be similar to the below.
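If you would rather have a scriptable check than read the table by eye, the node list can be filtered for anything that is not Ready. A small sketch, with `not_ready_nodes` being a hypothetical helper name:

```shell
# Hypothetical helper: print the names of nodes whose STATUS column is
# anything other than exactly "Ready". Reads the output of
#   kubectl get nodes --no-headers
# on stdin. A cordoned node ("Ready,SchedulingDisabled") is also reported.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}
```

`kubectl get nodes --no-headers | not_ready_nodes` prints nothing once all three nodes have joined and are Ready. Alternatively, `kubectl wait --for=condition=Ready node --all --timeout=120s` blocks until they are.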

In addition to the above, we could label all three nodes, or only a selected number of them, as worker nodes.

The below commands label all 3 nodes as worker nodes. This is not a must, and you can change it according to your preference.

kubectl label node k3s-ctrl1 node-role.kubernetes.io/worker=

kubectl label node k3s-ctrl2 node-role.kubernetes.io/worker=

kubectl label node k3s-ctrl3 node-role.kubernetes.io/worker=

If you want to verify that the cluster is working properly, you can try a simple deployment like below.

kubectl create deployment nginx --image=nginx

That’s it for the K3s Cluster setup!
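Before moving on, it may be worth confirming that the nginx test deployment actually rolled out, and then removing it so it doesn't linger in the cluster. A minimal sketch; the function name and the `KUBECTL` override variable are my own additions for illustration.

```shell
# Allow overriding the kubectl binary (e.g. "k3s kubectl"-style wrappers).
KUBECTL=${KUBECTL:-kubectl}

# Wait up to 2 minutes for the nginx test deployment to become available,
# and delete it only if the rollout succeeded.
verify_and_cleanup_nginx() {
  "$KUBECTL" rollout status deployment/nginx --timeout=120s &&
    "$KUBECTL" delete deployment nginx
}
```

Calling `verify_and_cleanup_nginx` returns a non-zero exit code if the rollout does not complete in time, in which case the deployment is left in place for inspection.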

Rook Ceph Installation

First, clone the Rook repository from GitHub.

git clone --single-branch --branch v1.14.8 https://github.com/rook/rook.git

Next, cd to the rook/deploy/examples location and execute the below kubectl command to apply the Custom Resource Definitions along with the common and operator resources.

cd rook/deploy/examples

kubectl create -f crds.yaml -f common.yaml -f operator.yaml

Make sure the operator pod within the rook-ceph namespace is in a Running state.

kubectl get pods -n rook-ceph

Next, let’s create a cluster-custom.yaml within the same location.

Here, I’ve specified the disks according to my requirement.

apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: quay.io/ceph/ceph:v18.2.2
    allowUnsupported: false
  dataDirHostPath: /var/lib/rook
  skipUpgradeChecks: false
  continueUpgradeAfterChecksEvenIfNotHealthy: false
  waitTimeoutForHealthyOSDInMinutes: 10
  upgradeOSDRequiresHealthyPGs: false
  mon:
    count: 3
    allowMultiplePerNode: false
  mgr:
    count: 2
    allowMultiplePerNode: false
    modules:
      - name: rook
        enabled: true
  dashboard:
    enabled: true
    port: 8443
    ssl: true
  monitoring:
    enabled: false
    metricsDisabled: false
  network:
    connections:
      encryption:
        enabled: false
      compression:
        enabled: false
      requireMsgr2: false
  crashCollector:
    disable: false
  logCollector:
    enabled: true
    periodicity: daily
    maxLogSize: 500M
  cleanupPolicy:
    confirmation: ""
    sanitizeDisks:
      method: quick
      dataSource: zero
      iteration: 1
    allowUninstallWithVolumes: false
  removeOSDsIfOutAndSafeToRemove: false
  priorityClassNames:
    mon: system-node-critical
    osd: system-node-critical
    mgr: system-cluster-critical
  storage:
    useAllNodes: false
    useAllDevices: true
    config:
      databaseSizeMB: "1024"
    nodes:
      - name: "k3s-ctrl1"
        devices:
          - name: "sdb"
          - name: "sdc"
      - name: "k3s-ctrl2"
        devices:
          - name: "sdb"
          - name: "sdc"
      - name: "k3s-ctrl3"
        devices:
          - name: "sdb"
          - name: "sdc"
    onlyApplyOSDPlacement: false
  disruptionManagement:
    managePodBudgets: true
    osdMaintenanceTimeout: 30
    pgHealthCheckTimeout: 0
  csi:
    readAffinity:
      enabled: false
  healthCheck:
    daemonHealth:
      mon:
        disabled: false
        interval: 45s
      osd:
        disabled: false
        interval: 60s
      status:
        disabled: false
        interval: 60s
    livenessProbe:
      mon:
        disabled: false
      mgr:
        disabled: false
      osd:
        disabled: false
    startupProbe:
      mon:
        disabled: false
      mgr:
        disabled: false
      osd:
        disabled: false
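Since a single indentation slip in a manifest this long can change its meaning, a quick preflight before creating the cluster can save some debugging. The sketch below waits for the operator pod (selected by the `app=rook-ceph-operator` label used in Rook's operator.yaml) and then asks the API server to validate the manifest without persisting anything. The function name and the `KUBECTL` override are hypothetical.

```shell
# Allow overriding the kubectl binary.
KUBECTL=${KUBECTL:-kubectl}

# Wait for the Rook operator pod to be Ready, then do a server-side dry run
# of the CephCluster manifest ($1, default: cluster-custom.yaml).
# Nothing is created by the dry run.
rook_preflight() {
  manifest=${1:-cluster-custom.yaml}
  "$KUBECTL" wait -n rook-ceph --for=condition=Ready pod \
    -l app=rook-ceph-operator --timeout=300s &&
    "$KUBECTL" create --dry-run=server -f "$manifest"
}
```

Run `rook_preflight` from rook/deploy/examples; a YAML or schema problem in the file should show up here as an error rather than later as a half-configured cluster.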

Finally, create the cluster with the below command.

kubectl create -f cluster-custom.yaml

In addition to this, we can set up the Ceph toolbox as well. The relevant manifest file is located within the same location (rook/deploy/examples).

kubectl apply -f toolbox.yaml

With this, the Ceph status can be checked.

E.g., the commands to view the Ceph status and the Ceph OSD tree are as below.

First, list the available pods.

kubectl get pods -n rook-ceph

Let’s assume rook-ceph-tools-58c6857df4-7qrwp is the toolbox pod’s name.

kubectl exec -it -n rook-ceph rook-ceph-tools-58c6857df4-7qrwp -- ceph status

kubectl exec -it -n rook-ceph rook-ceph-tools-58c6857df4-7qrwp -- ceph osd tree

The ceph status output could show HEALTH_OK, or sometimes HEALTH_WARN 😃.

The ceph osd tree command shows the OSD tree, which in this setup should list two OSDs under each of the three hosts.
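The toolbox pod name changes whenever the pod is recreated, so it can be handier to address the deployment instead of the pod. A minimal sketch, assuming the toolbox was created from toolbox.yaml and is therefore a deployment named rook-ceph-tools; the function names and the `KUBECTL` override are hypothetical.

```shell
# Allow overriding the kubectl binary.
KUBECTL=${KUBECTL:-kubectl}

# Run any ceph subcommand inside the toolbox without knowing the pod name,
# e.g. `ceph_tools status` or `ceph_tools osd tree`.
ceph_tools() {
  "$KUBECTL" exec -n rook-ceph deploy/rook-ceph-tools -- ceph "$@"
}

# Succeed only if the first word printed by `ceph health` is HEALTH_OK.
ceph_is_healthy() {
  [ "$(ceph_tools health | awk 'NR == 1 { print $1 }')" = "HEALTH_OK" ]
}
```

For example, `ceph_is_healthy && echo "cluster is healthy"` could be used in a script that waits for the cluster to settle after creation.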

If you’ve followed until now, well done!

That’s it for the Rook-Ceph Cluster Deployment!

In the upcoming articles, we’ll discuss how to remove this Ceph and K3s cluster entirely while cleaning up the OSDs. In another article, we’ll see how to leverage CephFS to mount a shared file system to all the pods and how to make use of CephNFS (the Ganesha service) to mount the CephFS storage to the host file system.

Until next time, see you 👋

References:

- https://docs.k3s.io/quick-start
